Agents typically run one or more services in the background of a system, with system privileges sufficient to carry out their purposes. These services normally consume very little CPU time except when asked to perform a major task. Usually at least two services are running at any given time; other services may also be active, depending on the architecture of the product. Vulnerability assessment agents are inextricably linked to the audit of the target, whereas appliances can be used for more than one audit method.

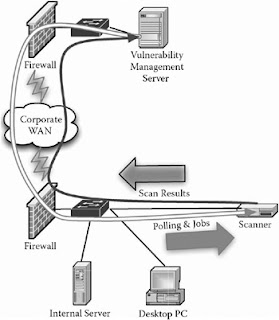

As shown in Figure 1, one service listens on the network for configuration and assessment instructions from a controlling server. This same service or an additional service may be used to communicate assessment results back to the server. The second service is one that performs the actual vulnerability assessment of the local host and, in some cases, adjacent hosts on the network.
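The division of labor between the two services can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class name `TwoServiceAgent`, the JSON message fields (`audit`, `targets`), and the in-process queues that stand in for the network connection to the controlling server are all hypothetical and do not come from any particular product.

```python
import json
import queue


class TwoServiceAgent:
    """Toy model of an agent's listening and assessment services."""

    def __init__(self) -> None:
        # In-process queues stand in for the network link to the server.
        self.instructions = queue.Queue()  # controller -> agent
        self.results = queue.Queue()       # agent -> controller

    def listener_service(self, raw_message: str) -> None:
        """Service 1: accept an instruction sent by the controlling server."""
        self.instructions.put(json.loads(raw_message))

    def assessment_service(self) -> None:
        """Service 2: assess every target named in the pending instructions."""
        while not self.instructions.empty():
            instruction = self.instructions.get()
            for target in instruction["targets"]:
                # Placeholder: a real agent would inspect the target here.
                self.results.put({
                    "audit": instruction["audit"],
                    "target": target,
                    "status": "assessed",
                })

    def reporting_service(self) -> list:
        """Hand back all queued results, as the listener or a third service might."""
        reports = []
        while not self.results.empty():
            reports.append(self.results.get())
        return reports
```

Feeding `listener_service` a message such as `{"audit": "patch-level", "targets": ["localhost"]}` and then calling `assessment_service` and `reporting_service` in turn walks one instruction through the whole path.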

The basic characteristics of agents include the following:

- Autonomous: They do not require constant input and operation by another system or individual.

- Adaptive: They respond to changes in their environment according to some specified rules. Depending on the level of sophistication, some agents are more adaptive than others.

- Distributed: Agents are not confined to a single system or even a network.

- Self-updating: Some consider this characteristic not to be unique to agents, but for VM it is an important capability. Agents must be able to collect and apply the latest vulnerability information and auditing capabilities.

A VM agent is a software system, tightly linked to the inner workings of a host, that recognizes and responds to changes in the environment that may constitute a vulnerability. VM agents function in two basic roles. First, they monitor the state of system software and configuration for vulnerabilities. Second, they perform vulnerability assessments of nearby systems on behalf of a controller. By definition, agents act in a semiautonomous fashion. They are given a set of parameters to apply to their behavior, and carry out those actions without further instruction. An agent does not need to be told every time it is to assess the state of the current machine. It may not even be necessary to instruct the agent to audit adjacent systems.
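To make the semiautonomous behavior concrete, the following minimal sketch shows an agent that receives its parameters once and then decides for itself when an audit is due. The names `AgentParameters`, `audit_interval_s`, `audit_neighbors`, and `tick` are assumptions made for illustration; real agents expose different settings.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class AgentParameters:
    """Hypothetical settings delivered once by the controller."""
    audit_interval_s: float = 3600.0
    audit_neighbors: bool = False


@dataclass
class Agent:
    params: AgentParameters
    last_audit: Optional[float] = None
    log: List[Tuple[str, float]] = field(default_factory=list)

    def tick(self, now: float) -> None:
        """Called periodically; the agent itself decides whether to audit."""
        due = (
            self.last_audit is None
            or now - self.last_audit >= self.params.audit_interval_s
        )
        if not due:
            return
        self.log.append(("local", now))          # assess the current machine
        if self.params.audit_neighbors:
            self.log.append(("neighbors", now))  # assess adjacent systems
        self.last_audit = now
```

With an interval of 60 seconds, calling `tick` at times 0, 30, 60, 90, and 120 triggers audits at 0, 60, and 120 only, and no per-audit instruction from the controller is involved.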

Unlike agents, network-based vulnerability scanners are typically provided detailed instructions about when and how to conduct an audit. The specifics of each audit are communicated every time one is initiated. By design, agents are loosely coupled to the overall VM system so they can minimize the load and dependency on a single server.

The method of implementation involves one or more system services along with a few on-demand programs for functions not required on a continuous basis. For example, the agent requires a continuous supervisory and communication capability on the host. This enables it to receive instructions, deliver results, and execute audits as needed. Such capabilities take very little memory and few processor cycles.

Specialized programs are invoked as needed to perform more CPU-intensive activities such as local or remote network audits. These programs in effect perform most of the functions found in a network vulnerability scanner. Once an audit completes, the gathered information is handed to the supervisory service, which passes it back to the central reporting and management server.
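The split between a lightweight supervisory service and on-demand audit programs might look like the sketch below. It assumes the audit program is a separate process that prints its findings as JSON; the inline `AUDIT_PROGRAM` string is a stand-in for a real audit executable, and `run_on_demand_audit`, `supervise`, and `send_to_server` are hypothetical names.

```python
import json
import subprocess
import sys

# Stand-in for a separate, CPU-intensive audit executable (hypothetical).
# It reports an empty list of findings for the target it is given.
AUDIT_PROGRAM = (
    "import json, sys; "
    "print(json.dumps({'target': sys.argv[1], 'findings': []}))"
)


def run_on_demand_audit(target: str, timeout_s: float = 30.0) -> dict:
    """Launch the audit program only when needed and parse its JSON output."""
    completed = subprocess.run(
        [sys.executable, "-c", AUDIT_PROGRAM, target],
        capture_output=True,
        text=True,
        timeout=timeout_s,
        check=True,  # raise if the audit program exits with an error
    )
    return json.loads(completed.stdout)


def supervise(targets, send_to_server) -> None:
    """Supervisory role: run each audit, then pass results to the server."""
    for target in targets:
        send_to_server(run_on_demand_audit(target))
```

Because the audit runs in its own short-lived process, the supervisory service stays small between audits, which matches the low memory and processor footprint described above.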

The detection of local host vulnerabilities is sometimes carried out by performing an audit of all configuration items on the target host in a single, defined process during a specific time window. An alternative approach is to monitor the configuration state of the current machine continuously. When a change is made, the intervening vulnerability assessment software evaluates the change for vulnerabilities and immediately reports the change to the management server. Today this capability is intertwined with the growing endpoint security market. The detection of configuration changes and the added capability of applying security policy blur the relationships among endpoint protection, configuration compliance, and vulnerability audit. This combination will ultimately lead to tighter, more responsive security.
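The continuous-monitoring approach can be illustrated with a small sketch that compares two configuration snapshots, evaluates each changed item, and reports it. The setting names, the `RULES` table, and the `report` callback are invented for the example; an actual product would draw its checks from its own vulnerability content.

```python
def diff_config(old: dict, new: dict) -> dict:
    """Return {item: (before, after)} for every added, removed, or changed item."""
    keys = old.keys() | new.keys()
    return {
        key: (old.get(key), new.get(key))
        for key in sorted(keys)
        if old.get(key) != new.get(key)
    }


# Hypothetical rules: each maps a setting to a test that is true when the
# new value should be treated as a vulnerability.
RULES = {
    "ssh.permit_root_login": lambda value: value == "yes",
    "firewall.enabled": lambda value: value is False,
}


def evaluate_change(old: dict, new: dict, report) -> None:
    """Evaluate each configuration change and report it immediately."""
    for key, (before, after) in diff_config(old, new).items():
        rule = RULES.get(key)
        report({
            "item": key,
            "before": before,
            "after": after,
            "vulnerable": bool(rule and rule(after)),
        })
```

Every change is reported, whether or not a rule flags it, so the management server sees both the configuration drift and the assessment of it.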